NVIDIA and Microsoft Drive Innovation for Windows PCs in New Era of Generative AI

Generative AI, in the form of large language model (LLM) applications like ChatGPT, image generators such as Stable Diffusion and Adobe Firefly, and game rendering techniques like NVIDIA DLSS 3 Frame Generation, is rapidly ushering in a new era of computing for productivity, content creation, gaming and more.

At the Microsoft Build developer conference, NVIDIA and Microsoft today showcased a suite of advancements in Windows 11 PCs and workstations with NVIDIA RTX GPUs to meet the demands of generative AI.

More than 400 Windows apps and games already employ AI technology, accelerated by dedicated processors on RTX GPUs called Tensor Cores. Today’s announcements will empower developers to build the next generation of Windows apps with generative AI at their core. They include tools to develop AI on Windows PCs, frameworks to optimize and deploy AI, and driver performance and efficiency improvements.

“AI will be the single largest driver of innovation for Windows customers in the coming years,” said Pavan Davuluri, corporate vice president of Windows silicon and system integration at Microsoft. “By working in concert with NVIDIA on hardware and software optimizations, we’re equipping developers with a transformative, high-performance, easy-to-deploy experience.”

Develop Models With Windows Subsystem for Linux

AI development has traditionally taken place on Linux. Developers who also needed the breadth and depth of the Windows ecosystem had to either dual-boot their systems or use multiple PCs to work in their AI development OS.

Over the past few years, Microsoft has been building a powerful capability to run Linux directly within the Windows OS, called Windows Subsystem for Linux (WSL). NVIDIA has been working closely with Microsoft to deliver GPU acceleration and support for the entire NVIDIA AI software stack inside WSL. Developers can now use a Windows PC for all their local AI development needs, with support for GPU-accelerated deep learning frameworks on WSL.

With NVIDIA RTX GPUs delivering up to 48GB of memory in desktop workstations, developers can now work with models on Windows that were previously available only on servers. The large memory also improves the performance and quality of local fine-tuning of AI models, enabling designers to customize them to their own style or content. And because the same NVIDIA AI software stack runs on NVIDIA data center GPUs, developers can easily push their models to Microsoft Azure Cloud for large training runs.
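The 48GB figure matters because, for fast local inference or fine-tuning, a model's weights generally need to fit in GPU memory. The sketch below gives a rough, weights-only estimate of that footprint from a parameter count. The helper names, the example parameter counts and the 24 GB comparison point are hypothetical. The estimate also ignores activations, caches, optimizer state and framework overhead, so real requirements are higher.

```python
# Rough, weights-only estimate of GPU memory needed for a model.
# Assumption: ignores activations, KV cache, optimizer state and framework
# overhead, so actual requirements will be higher than these numbers.

# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {
    "fp32": 4,
    "fp16": 2,
    "int8": 1,
}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(num_params: float, vram_gb: float, dtype: str = "fp16") -> bool:
    """True if the weights alone fit in the given amount of GPU memory."""
    return weight_memory_gb(num_params, dtype) <= vram_gb


if __name__ == "__main__":
    # Hypothetical model sizes, compared against 24 GB and 48 GB of GPU memory.
    for params in (7e9, 13e9, 20e9):
        size = weight_memory_gb(params)
        print(
            f"{params / 1e9:.0f}B params @ fp16: {size:.0f} GB, "
            f"fits in 24 GB: {fits(params, 24)}, fits in 48 GB: {fits(params, 48)}"
        )
```

Running it shows that a 13B-parameter model in fp16 (about 26 GB of weights) exceeds 24 GB but fits within 48 GB, before any of the overheads the sketch leaves out.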

Rapidly Optimize and Deploy Models

With trained models in hand, developers need to optimize and deploy AI for target devices.

Microsoft released the Microsoft Olive toolchain for optimizing PyTorch models and converting them to ONNX, enabling developers to automatically tap into GPU hardware acceleration such as RTX Tensor Cores. Developers can optimize models via Olive and ONNX, then deploy Tensor Core-accelerated models to PC or cloud. Microsoft continues to invest in making PyTorch and related tools and frameworks work seamlessly with WSL to provide the best AI model development experience.

Improved AI Performance, Power Efficiency

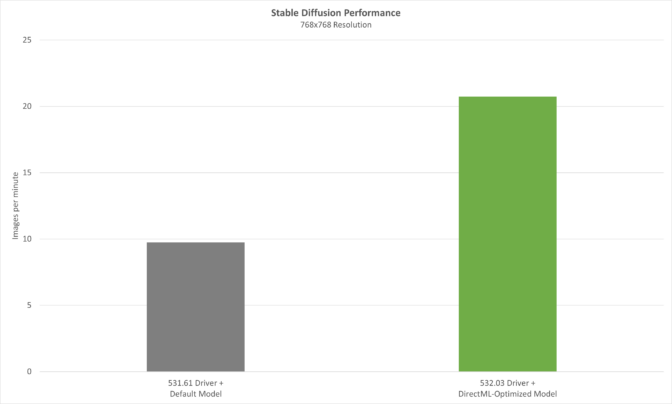

Once deployed, generative AI models demand incredible inference performance. RTX Tensor Cores deliver up to 1,400 Tensor TFLOPS for AI inferencing. Over the last year, NVIDIA has worked to improve DirectML performance to take full advantage of RTX hardware.
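To put a peak-throughput figure like 1,400 TFLOPS in perspective, the sketch below computes an idealized lower bound on the time of a single matrix multiply, counting one multiply and one add per inner-product term. It assumes the GPU sustains its full advertised peak, which real kernels do not. Headline peak figures also depend on numeric precision, so treat the result as a floor, not a benchmark. The 4096 × 4096 matrix size and the function names are arbitrary illustrations.

```python
# Idealized lower bound on matrix-multiply time at a given peak throughput.
# Assumption: every FLOP runs at the advertised peak rate; real workloads
# are slower because of memory bandwidth, occupancy and precision limits.


def gemm_flops(m: int, n: int, k: int) -> int:
    """FLOPs for C[m, n] = A[m, k] @ B[k, n]: one multiply and one add per term."""
    return 2 * m * n * k


def ideal_time_us(flops: float, peak_tflops: float) -> float:
    """Time in microseconds if all FLOPs executed at peak throughput."""
    return flops / (peak_tflops * 1e12) * 1e6


if __name__ == "__main__":
    flops = gemm_flops(4096, 4096, 4096)
    print(f"{flops:.3e} FLOPs -> at least {ideal_time_us(flops, 1400):.1f} us at 1,400 TFLOPS")
```

For this example the bound works out to roughly 0.1 ms. Measured times will be longer, and the gap between the two is what driver and model optimizations try to narrow.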

On May 24, we’ll release our latest optimizations in Release 532.03 drivers that combine with Olive-optimized models to deliver big boosts in AI performance. Using an Olive-optimized version of the Stable Diffusion text-to-image generator with the popular Automatic1111 distribution, performance improves by more than 2x with the new driver.

With AI coming to nearly every Windows application, efficiently delivering inference performance is critical, especially for laptops. Coming soon, NVIDIA will introduce new Max-Q low-power inferencing for AI-only workloads on RTX GPUs. It optimizes Tensor Core performance while keeping the GPU’s power consumption as low as possible, extending battery life and maintaining a cool, quiet system. The GPU can then dynamically scale up for maximum AI performance when the workload demands it.

Join the PC AI Revolution Now

Top software developers such as Adobe, DxO, ON1 and Topaz have already incorporated NVIDIA AI technology. More than 400 Windows applications and games are now optimized for RTX Tensor Cores.

“AI, machine learning and deep learning power all Adobe applications and drive the future of creativity. Working with NVIDIA, we continuously optimize AI model performance to deliver the best possible experience for our Windows users on RTX GPUs,” said Ely Greenfield, CTO of digital media at Adobe.

“NVIDIA is helping to optimize our WinML model performance on RTX GPUs, which is accelerating the AI in DxO DeepPRIME, as well as providing better denoising and demosaicing, faster,” said Renaud Capolunghi, senior vice president of engineering at DxO.

“Working with NVIDIA and Microsoft to accelerate our AI models running in Windows on RTX GPUs is providing a huge benefit to our audience. We’re already seeing 1.5x performance gains in our suite of AI-powered photography editing software,” said Dan Harlacher, vice president of products at ON1.

“Our extensive work with NVIDIA has led to improvements across our suite of photo- and video-editing applications. With RTX GPUs, AI performance has improved drastically, enhancing the experience for users on Windows PCs,” said Suraj Raghuraman, head of AI engine development at Topaz Labs.

NVIDIA and Microsoft are making several resources available for developers to test-drive top generative AI models on Windows PCs. An Olive-optimized version of the Dolly 2.0 large language model is available on Hugging Face. A PC-optimized version of the NVIDIA NeMo large language model for conversational AI is coming soon to Hugging Face.

Developers can also learn how to optimize their applications end to end to take full advantage of GPU acceleration through the NVIDIA AI for accelerating applications developer site.

The complementary technologies behind Microsoft’s Windows platform and NVIDIA’s dynamic AI hardware and software stack will help developers quickly and easily develop and deploy generative AI on Windows 11.

Microsoft Build runs through Thursday, May 25. Tune in to learn more about shaping the future of work with AI.
